By: Kevin Reuning and Adrian Dooley

In the digital age, predicting the outcome of U.S. elections has become both an art and a science, captivating millions of Americans every election cycle. Although political scientists have a long history of attempting to predict and explain elections, Nate Silver’s FiveThirtyEight blog brought these models to many more Americans in 2008. That year, Silver predicted that Obama would win the popular vote by 6.1 percentage points and finish with around 350 Electoral College votes, both just below Obama’s actual margin of 7.3 percentage points and 365 Electoral College votes. Silver’s website also garnered over 5 million visits on election day.[1]

By our count, there are at least seven of these forecasting models, including models developed by The Economist and ABC News. Each combines a wide array of data to generate a specific quantitative prediction (note that the way these predictions are displayed varies). In this post we outline how these different forecast models work, the type of information they use, and the assumptions they make (implicitly or explicitly).

It is worth noting what forecast models are not. Forecast models do not include polling aggregators like those from The New York Times and Real Clear Politics. Unlike forecast models, polling aggregators are designed to provide a snapshot of the election as it stands. They do not explicitly project forward who is likely to win. Forecast models are also different from expert intuition models such as Keys to the White House, Sabato’s Crystal Ball, and Cook Political Report. The output of these expert models varies significantly, with some focusing on state-level predictions and some on overall election-level predictions. The inputs to these models also vary significantly and are often not explicitly stated. Instead, an expert uses different cues to identify who they think will win based on past experience.

To clarify the landscape of election forecasting, it’s essential to distinguish between the different types of models available. Table 1 outlines the primary categories, detailing their methodologies and providing examples of each.

Table 1: Types of Election Models

| TYPE | DESCRIPTION | EXAMPLES |
|---|---|---|
| Forecasting | Utilizes a combination of polling data and other quantitative metrics to produce specific predictions on election outcomes. These models often incorporate statistical techniques to adjust for biases and trends. | The Economist, 538, Decision Desk HQ |
| Polling Aggregation | Aggregates multiple polls to provide a current snapshot of the race without projecting future outcomes. These aggregators typically average poll results to show the present state of voter preferences. | New York Times, Real Clear Polling |
| Expert Intuition | Relies on the qualitative and quantitative insights of political analysts and experts. These models depend on the experts’ experience and judgment to assess various indicators and predict likely winners. | Keys to the White House, Sabato’s Crystal Ball, Cook Political Report |

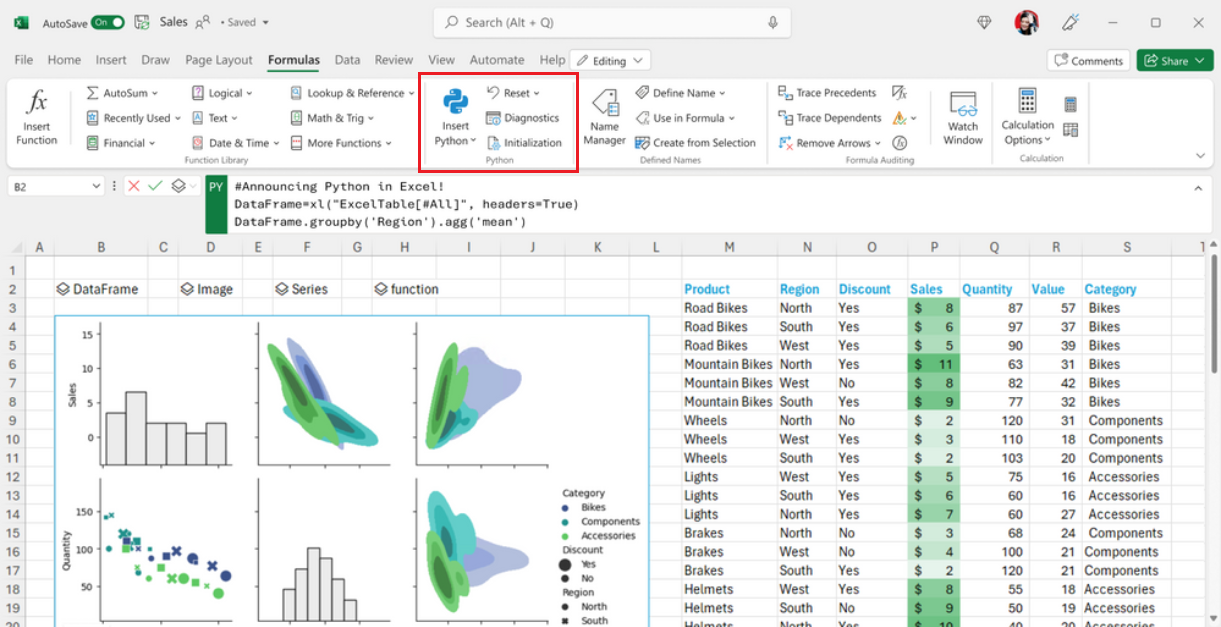

What is in a forecasting model?

Polling

To better understand election forecasts, we collected information from a variety of prominent forecasts; the full list of models examined is in Table 2. All these models incorporate polling data, but a critical initial decision is determining which polls to include or exclude. Surprisingly, most election models lack explicit criteria for poll inclusion. FiveThirtyEight provides the most comprehensive rules, requiring methodological transparency and that pollsters meet ethical standards as outlined by the American Association for Public Opinion Research (AAPOR). Silver Bulletin uses similar rules (although it has a slightly different list of “banned” pollsters). In addition, several (JHK and possibly The Economist) rely on FiveThirtyEight’s database of polls. In contrast, Race to the White House and Decision Desk HQ each maintain their own polling database with very little transparency about how polls are added to it. Overall, though, it appears that in many cases the polling data used is the same across the different forecasts.

Although the forecasts start with much of the same polling data, they immediately begin to diverge significantly in how they deal with the variation across polls and pollsters. Polls can vary in many ways, including the size of the sample, their modality (phones with a live caller, phones with automated messages, online, etc.), how they invite individuals to participate (phone, text, online panel), decisions made on how to weight the sample, and the funding of the poll. Most forecasts attempt to correct polls for these different effects by estimating models of the polls to calculate the bias induced by different polling decisions. After corrections are made, the polls are averaged, with several forecasts (FiveThirtyEight, Silver Bulletin, and JHK) weighting each poll as a function of the sample size, recency, and FiveThirtyEight pollster rating. One interesting exception is Decision Desk HQ, which simply fits a cubic spline to the polls.
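To make the weighting idea concrete, here is a minimal sketch of a weighted poll average. It is not the formula any of these forecasts actually uses: the square-root sample-size term, the 14-day half-life, and the `pollster_weight` multiplier are all assumptions chosen for illustration, and the `Poll` class and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
import math


@dataclass
class Poll:
    margin: float           # candidate A minus candidate B, in percentage points
    sample_size: int        # number of respondents
    end_date: date          # last day the poll was in the field
    pollster_weight: float  # hypothetical quality multiplier (e.g. derived from a rating)


def poll_weight(poll: Poll, today: date, half_life_days: float = 14.0) -> float:
    """Combine sample size, recency, and pollster quality into one weight.

    Assumptions: weight grows with sqrt(sample size) and halves every
    `half_life_days` days. Real forecasts use their own functional forms.
    """
    age_days = (today - poll.end_date).days
    recency = 0.5 ** (age_days / half_life_days)
    return math.sqrt(poll.sample_size) * recency * poll.pollster_weight


def weighted_poll_average(polls: list[Poll], today: date,
                          half_life_days: float = 14.0) -> float:
    """Return the weighted mean of poll margins."""
    weights = [poll_weight(p, today, half_life_days) for p in polls]
    total = sum(weights)
    if total == 0:
        raise ValueError("all polls have zero weight")
    return sum(w * p.margin for w, p in zip(weights, polls)) / total
```

With these assumed weights, a poll that is two weeks old counts half as much as an otherwise identical poll finished today, so the average leans toward the newer result.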

Fundamentals

Nearly every forecasting model incorporates non-polling data, referred to as ‘fundamentals,’ which aim to capture the underlying factors influencing the election. This can include a wide array of data such as economic indicators, historical partisan lean, and consumer outlook. There is significant variation both in how transparent models are about their fundamentals and in which fundamentals they include. For example, FiveThirtyEight includes 11 different economic variables and a handful of political variables at the state or national level. Race to the White House, in contrast, includes 10 economic variables that often overlap with FiveThirtyEight’s. For example, both include the University of Michigan’s consumer sentiment index and inflation measured by the CPI. There are also small differences: Race to the White House includes the NASDAQ Composite Index while FiveThirtyEight includes the S&P 500, and FiveThirtyEight uses slightly different measures of income. There are bigger differences for political variables. FiveThirtyEight mainly focuses on historic partisan lean while also accounting for specifics of the candidate (incumbency and home state advantages). Race to the White House, though, also pulls fundraising data and information from special elections.

Many of the remaining models are not as transparent about the data they include. The Economist provides important details on the process used to select the variables at the national level,[2] which they say identifies a set of variables similar to those in Alan Abramowitz’s Time for Change Model (which includes presidential approval, change in real GNP, and an indicator for incumbency). Other models often say only that they have created an “economic index,” though they often go into deep detail on their assumptions about incumbency. It isn’t clear how much the choice among different economic indicators matters, although The Economist’s approach does demonstrate that there are principled ways to build these models of fundamentals.

State Correlation

Another important component of forecasts is the state-level estimates. As should be clear, the US presidential election is not decided by popular vote but is instead decided by electors tied to specific states. This means that the national-level popular vote is only somewhat informative about who will win (see Hillary Clinton in 2016 and Al Gore in 2000). State-level estimates are therefore necessary, but state-level polling is significantly less common than national-level polling. In response, most forecasts attempt to connect polling from one state to polling from other states (as well as to national polls). They do this by incorporating state-level correlations either explicitly in their model of polls or in their simulations. Most forecasts use ancillary data to estimate similarities across states. For example, Decision Desk HQ uses a “comprehensive set of demographic, geographic, and political variables” to identify 10 regions. In their end simulations, each region has its own error, which moves state-level polls in the same direction for states in that region. Others, like The Economist, directly incorporate state-level relationships into their model. Similar to Decision Desk HQ, though, The Economist also relies on a set of demographic and political factors to measure how similar two states are.
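The region-based approach described above can be sketched as a small simulation in which every state's margin receives a shared national error, a shared regional error, and its own state error. This is only an illustration under assumed settings, not Decision Desk HQ's actual procedure: the normal error distributions, the standard deviations, the function name `win_probability`, and the example states are all hypothetical.

```python
import random

# Hypothetical inputs: expected margin (points, positive = candidate A ahead),
# region label, and electoral votes for each state.
EXAMPLE_STATES = [
    {"name": "State A", "margin": 1.5, "region": "North", "ev": 15},
    {"name": "State B", "margin": -0.5, "region": "North", "ev": 10},
    {"name": "State C", "margin": 0.5, "region": "South", "ev": 16},
]


def win_probability(states, n_sims=10_000, national_sd=2.0,
                    regional_sd=2.0, state_sd=2.0, seed=0):
    """Estimate how often candidate A wins a majority of electoral votes.

    Each simulation draws one national error, one error per region, and one
    independent error per state. States in the same region share the regional
    draw, so their simulated margins move together.
    """
    rng = random.Random(seed)
    regions = sorted({s["region"] for s in states})
    total_ev = sum(s["ev"] for s in states)
    wins = 0
    for _ in range(n_sims):
        national_error = rng.gauss(0.0, national_sd)
        regional_error = {r: rng.gauss(0.0, regional_sd) for r in regions}
        ev_won = 0
        for s in states:
            simulated = (s["margin"] + national_error
                         + regional_error[s["region"]]
                         + rng.gauss(0.0, state_sd))
            if simulated > 0:
                ev_won += s["ev"]
        if ev_won > total_ev / 2:
            wins += 1
    return wins / n_sims
```

Setting `regional_sd` and `national_sd` to zero makes the states independent, which is a quick way to see how much the shared errors change the estimated win probability for a given set of margins.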

Table 2: Forecast Models

| Publisher | Poll Sources | Economic Fundamentals | State Correlation |
|---|---|---|---|
| 24cast | Relies on 538 | Yes | No |
| Cnalysis | Relies on JHK average | No | ? |
| Decision Desk HQ | No explicit guidelines | No | Yes |
| FiveThirtyEight | Specific requirements | Yes | Yes |
| JHK Forecast | Relies on 538 | Yes | Yes |
| Princeton Election Consortium | Relies on 538 | No | No |
| Race to the WH | No explicit guidelines | Yes | No |
| Silver Bulletin | Specific requirements | ? | Yes |
| The Economist | No explicit guidelines, possibly relies on 538 | Yes | Yes |

Conclusion

Election forecasting models have grown in both complexity and profitability over the past two decades. This growth presents a paradox: as models become more sophisticated, the need for transparency increases so that readers can understand the underlying assumptions and methodologies. Currently, FiveThirtyEight is the only model among those discussed that openly shares a significant portion of its data, model structure, and outputs. The reason is likely the second trend, profitability. Other models like Silver Bulletin, Decision Desk HQ, and The Economist operate behind paywalls, which, while understandable for funding purposes, hinder comparative analysis and public understanding. Given that these paywalls are unlikely to fall, it would be a positive step for the people behind these models to commit to making their work public after the election. This is a big ask. It is likely that some of these models will be proven wrong, but we can learn from them only if their details are made public.

[1] https://www.nytimes.com/2008/11/10/business/media/10silver.html

[2] They estimate an elastic-net regularization model, using leave-one-out cross-validation to identify the optimal amount of shrinkage.